Use Linux Traffic Control as an impairment node in a test environment (part 1)

Rigorously testing a network device or distributed service requires complex, realistic network test environments. Linux Traffic Control (tc) with Network Emulation (netem) provides the building blocks to create an impairment node that simulates such networks.

This three-part series describes how to set up an impairment node using Linux Traffic Control. This first blog post introduces Linux Traffic Control and its queuing disciplines. The second part will show which traffic control configurations are available to impair traffic and how to use them. The third and last part will describe how to get an impairment node up and running!

Emulation by elimination

How does your product operate in a realistic (or high-latency) environment? Well, let’s find out.

- Maybe we can use the production network, which obviously has realistic latency? “Out of the question!”, responds the operations department.

- Perhaps we could engineer a purpose-built network with the desired characteristics? “Out of budget!”, yells your project manager.

This leaves us with emulation: simulating an (arbitrarily complex) network by configuring the desired network impairments in software.

Linux to the rescue

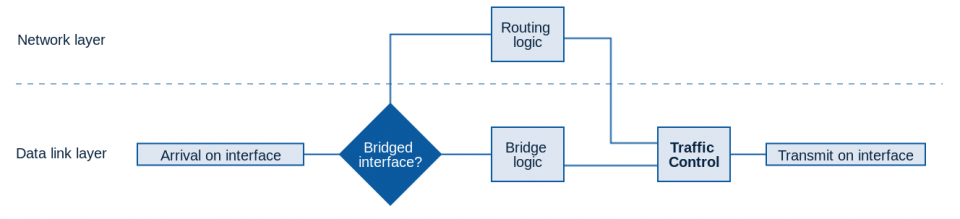

Network nodes (such as IP routers) often run a Linux-based operating system. The Linux kernel offers a native framework for routing, bridging, firewalling, address translation and much else.

Before a packet leaves the output interface, it passes through Linux Traffic Control (tc). This component is a powerful tool for scheduling, shaping, classifying and prioritizing traffic.

The network emulation (netem) project adds a queuing discipline, netem, that emulates wide area network properties such as latency, jitter, loss, duplication, corruption and reordering.

A first glance

Suppose we have an impairment node (any Linux-based system) that manipulates traffic between its two Ethernet interfaces, eth0 and eth1, to simulate a wide area network. An extra interface (e.g. eth2) should be available to configure the device out-of-band.

We can check the default queuing disciplines and traffic classes using the tc command (see its man page):

```
$ tc qdisc show
qdisc pfifo_fast 0: dev eth0 root refcnt 2 bands 3 priomap 1 2 2 2 1 2 0 0 1 1 1 1 1 1 1 1
qdisc pfifo_fast 0: dev eth1 root refcnt 2 bands 3 priomap 1 2 2 2 1 2 0 0 1 1 1 1 1 1 1 1
qdisc pfifo_fast 0: dev eth2 root refcnt 2 bands 3 priomap 1 2 2 2 1 2 0 0 1 1 1 1 1 1 1 1
$ tc class show
<no output>
```

The default traffic control configuration consists of a single queuing discipline, pfifo_fast (see its man page), and contains no user-defined traffic classes. This queuing discipline behaves more or less like a FIFO queue, but looks at the IP ToS bits to prioritize certain packets. Each line in the output above should be read as follows:

Interface eth0 has a queuing discipline pfifo_fast with label 0: attached to the root of its qdisc tree. This qdisc classifies and prioritizes all outgoing packets by mapping each packet's Linux priority to one of the bands 0, 1 or 2. For IP traffic this priority is normally derived from the 4-bit ToS field, and the priomap lists the band for each of the 16 possible priority values. Band 0 is always served first; band 1 is only served while band 0 is empty, and band 2 only while bands 0 and 1 are both empty. Within a band, packets are sent in FIFO order.
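The priomap lookup can be sketched as a small POSIX shell function. The name `band_for_priority` is hypothetical; the function takes a Linux packet priority (0 to 15) followed by the 16 priomap entries, and prints the band found at that position.

```shell
#!/bin/sh
# band_for_priority PRIORITY ENTRY0 ... ENTRY15  (hypothetical helper)
# Prints the pfifo_fast band for a Linux packet priority (0-15), given the
# 16 priomap entries in the order tc prints them: entry N is the band for
# priority N.
band_for_priority() {
    prio="$1"
    shift
    # Reject anything that is not a plain non-negative integer.
    case "$prio" in
        '' | *[!0-9]*)
            echo "invalid priority: $prio" >&2
            return 1
            ;;
    esac
    # The priority must index one of exactly 16 priomap entries.
    if [ "$prio" -gt 15 ] || [ "$#" -ne 16 ]; then
        echo "need a priority 0-15 and 16 priomap entries" >&2
        return 1
    fi
    # Skip the first PRIORITY entries; the next one is the band.
    shift "$prio"
    echo "$1"
}
```

With the default map shown above, priority 6 (interactive traffic) maps to band 0, priority 0 (best effort) to band 1 and priority 2 (bulk) to band 2. Assuming a single pfifo_fast root qdisc as in the output above, the map can be read straight from tc with `map=$(tc qdisc show dev eth0 | sed -n 's/.*priomap *//p')`, followed by `band_for_priority 6 $map`.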

Queuerarchy

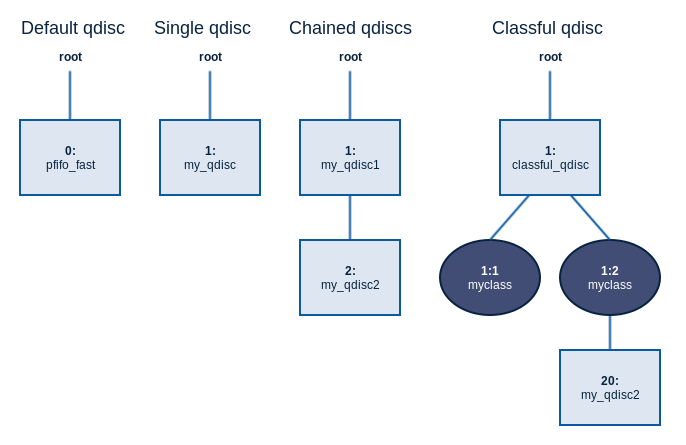

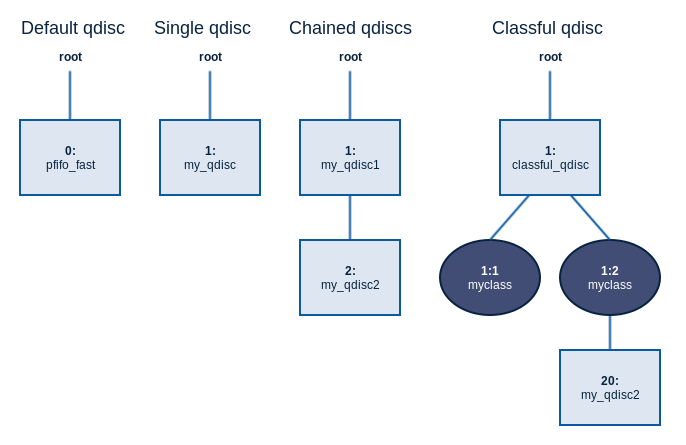

Each interface has a qdisc hierarchy. The first qdisc is attached to the root, and each subsequent qdisc specifies the label of its parent (a qdisc handle such as 1: or a class ID such as 1:2).

Some examples of hierarchies, together with the conventional labels, are shown in the pictures above.

Adding a qdisc to the root of an interface (using tc qdisc add) replaces the default configuration shown above. Deleting the root qdisc (using tc qdisc del) removes the complete hierarchy and restores the default.

```
$ tc qdisc add dev eth0 root handle 1: my_qdisc <args>
```
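The replace-and-restore cycle can be tried on a single interface. This is a minimal sketch, assuming a test interface named eth0 and a hypothetical `run` helper that only prints each command when `DRY_RUN=1`; the plain `pfifo` qdisc with a 100-packet limit is an arbitrary stand-in for a real configuration, and `replace_and_restore` is a hypothetical name.

```shell
#!/bin/sh
# Hypothetical helper: print the command when DRY_RUN=1, otherwise run it
# (running tc for real requires root privileges).
run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

# replace_and_restore DEV (hypothetical name)
# Replaces the default root qdisc of DEV with a plain packet FIFO, shows the
# result, then deletes the root qdisc so the kernel falls back to its default.
replace_and_restore() {
    dev="$1"
    run tc qdisc add dev "$dev" root handle 1: pfifo limit 100 || return 1
    run tc qdisc show dev "$dev"
    run tc qdisc del dev "$dev" root
    run tc qdisc show dev "$dev"
}
```

Run it as root against eth0 or eth1, never against the management interface eth2. After the `del`, the second `show` should list the default root qdisc again.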

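Queuing disciplines may be chained, with traffic flowing through both, by specifying the label of their predecessor as parent. This requires a predecessor that accepts a child qdisc, such as netem or tbf; a classless qdisc such as pfifo cannot have children.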

```
$ tc qdisc add dev eth0 root handle 1: my_qdisc1 <args>
$ tc qdisc add dev eth0 parent 1: handle 2: my_qdisc2 <args>
```
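A concrete instance of such a chain, sketched under assumptions: netem as the root qdisc 1: adds a fixed delay, and a token bucket filter (tbf) attached at `parent 1:` as handle 2: limits the rate of the delayed traffic. The function name, the hypothetical `run`/`DRY_RUN` helper and the tbf `burst` and `latency` values are illustrative choices, not recommendations.

```shell
#!/bin/sh
# Hypothetical helper: print the command when DRY_RUN=1, otherwise run it
# (running tc for real requires root privileges).
run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

# delay_then_limit DEV DELAY RATE (hypothetical name)
# Root qdisc 1: is netem, which delays every packet by DELAY; qdisc 2: is a
# token bucket filter chained below it that limits the delayed traffic to RATE.
delay_then_limit() {
    dev="$1" delay="$2" rate="$3"
    run tc qdisc add dev "$dev" root handle 1: netem delay "$delay" || return 1
    run tc qdisc add dev "$dev" parent 1: handle 2: tbf rate "$rate" burst 32kbit latency 400ms
}
```

For example, `delay_then_limit eth0 100ms 1mbit` (as root) delays traffic leaving eth0 by 100ms and caps it at 1mbit/s.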

Classful queuing disciplines classify their traffic. Each of the traffic classes can be handled in a specific way, through a specific child qdisc.

```
$ tc qdisc add dev eth0 root handle 1: classful_qdisc <args>
$ tc class add dev eth0 parent 1: classid 1:1 myclass <args>
$ tc class add dev eth0 parent 1: classid 1:2 myclass <args>
$ tc qdisc add dev eth0 parent 1:2 handle 20: my_qdisc2 <args>
```
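Filling in these placeholders with HTB (hierarchical token bucket) as the classful qdisc gives a sketch like the following. It assumes HTB's `default 2` option, which sends traffic that no filter classifies to class 1:2, so all traffic ends up in the netem leaf 20:; class 1:1 would only receive traffic if a filter steered it there. The rates, delay, function name and the `run`/`DRY_RUN` helper are illustrative assumptions.

```shell
#!/bin/sh
# Hypothetical helper: print the command when DRY_RUN=1, otherwise run it
# (running tc for real requires root privileges).
run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

# htb_with_impaired_default DEV RATE DELAY (hypothetical name)
# Root HTB qdisc 1: with two classes, 1:1 and 1:2. "default 2" sends all
# traffic that no filter classifies to class 1:2, whose child qdisc 20: is
# a netem delay.
htb_with_impaired_default() {
    dev="$1" rate="$2" delay="$3"
    run tc qdisc add dev "$dev" root handle 1: htb default 2 || return 1
    run tc class add dev "$dev" parent 1: classid 1:1 htb rate "$rate"
    run tc class add dev "$dev" parent 1: classid 1:2 htb rate "$rate"
    run tc qdisc add dev "$dev" parent 1:2 handle 20: netem delay "$delay"
}
```

Afterwards, `tc class show dev eth0` should list the two HTB classes that were previously absent from the default configuration.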

Configuring traffic impairments

To impair traffic leaving an interface eth0, we simply overwrite the default qdisc with our own impairment qdisc hierarchy. Which impairment configurations are available and how they can be configured is described in the second part of this series!
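Because tc acts on packets as they leave an interface, impairing traffic in both directions through the node means configuring both eth0 and eth1. The sketch below does that with a fixed netem delay. It is only a preview under stated assumptions: the interface names from the setup above, a hypothetical `run` helper with a `DRY_RUN` switch, and hypothetical function names.

```shell
#!/bin/sh
# Hypothetical helper: print the command when DRY_RUN=1, otherwise run it
# (running tc for real requires root privileges).
run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

# impair_both_directions DELAY (hypothetical name)
# Traffic from the eth0 side leaves through eth1 and vice versa, so a netem
# root qdisc on each interface delays both directions by DELAY.
impair_both_directions() {
    for dev in eth0 eth1; do
        run tc qdisc add dev "$dev" root handle 1: netem delay "$1" || return 1
    done
}

# clear_impairments (hypothetical name)
# Deleting the root qdisc restores each interface's default configuration.
# eth2, the out-of-band management interface, is deliberately left alone.
clear_impairments() {
    for dev in eth0 eth1; do
        run tc qdisc del dev "$dev" root
    done
}
```

Source the file and call `impair_both_directions 50ms` as root (or with `DRY_RUN=1` to only print the commands); `clear_impairments` restores the defaults. With 50ms on each interface, a request crossing the node and its response each pick up 50ms, so the round trip grows by roughly 100ms.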

More information

- man pages
  - tc: http://lartc.org/manpages/tc.html
  - tc-<qdisc>: http://lartc.org/manpages/
  - tc-netem: http://man7.org/linux/man-pages/man8/tc-netem.8.html

- Linux Advanced Routing & Traffic Control HOWTO, Chapter 9 on Queuing Disciplines, by Bert Hubert at lartc.org
  - Describes Traffic Control in the context of routing

- Traffic Control HOWTO, by Martin A. Brown at linux-ip.net
  - Full introduction
  - Chapter 6 on classless qdiscs, with illustrations
  - Chapter 7 on classful qdiscs, with illustrations

- Practical Guide to Linux Traffic Control, by Jason Boxman
  - Many examples and commands

- Netem project page at linuxfoundation.org