What happens if you introduce latency to a Remote PHY setup?

It’s been a while since we first explained how to use Linux Traffic Control as an impairment node to introduce latency to a test setup. More recently, the discussion surrounding Low Latency DOCSIS prompted us to investigate what would happen if we used an impairment node to introduce latency between the CCAP Core and a Remote PHY device. The results were surprising!

Impairing traffic and then measuring the effects

In our Remote PHY setup with an RPD node connected to a CCAP Core, we added an impairment node between the CCAP Core and the RPD. We configured the impairment node to introduce 1 millisecond of latency in both the upstream and the downstream direction. We then started latency measurements using our ByteBlower traffic generator/analyser, which was linked to the same PTP network as the RPD node.
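As a rough illustration of how such an impairment node could be configured, the sketch below builds the Linux Traffic Control commands for a fixed netem delay. The interface names `eth0` and `eth1` and the helper `netem_delay_command` are assumptions made for this example, not details of our setup. Because netem delays traffic leaving an interface, one command per direction is needed. The script only prints the commands; running them requires root privileges on the impairment node.

```python
def netem_delay_command(interface: str, delay_ms: float) -> list[str]:
    """Build the tc argv that adds a fixed netem delay to traffic leaving `interface`."""
    if delay_ms < 0:
        raise ValueError("delay must be non-negative")
    return ["tc", "qdisc", "add", "dev", interface,
            "root", "netem", "delay", f"{delay_ms:g}ms"]


# Hypothetical interface names on the impairment node.
CORE_FACING = "eth0"  # towards the CCAP Core; upstream traffic leaves here
RPD_FACING = "eth1"   # towards the RPD; downstream traffic leaves here

commands = [
    netem_delay_command(RPD_FACING, 1.0),   # delays downstream traffic
    netem_delay_command(CORE_FACING, 1.0),  # delays upstream traffic
]

for cmd in commands:
    print(" ".join(cmd))
```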

Surprising upstream observations

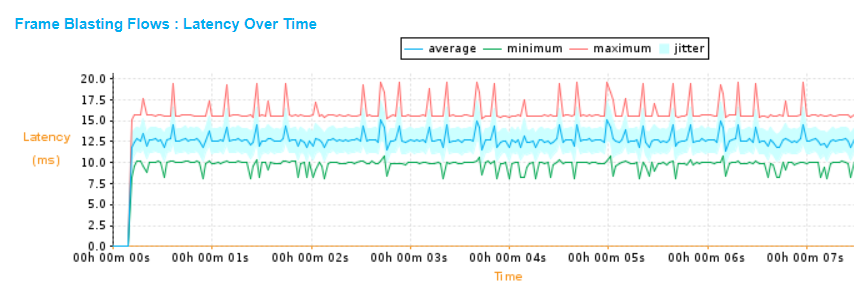

We measured latency over time and recorded minimum, average and maximum latency while sending traffic at different speeds and with different packet sizes. Below are the latency measurements for an upstream flow of 50 Mbps with 66-byte packets, with and without extra latency added in the CIN (Converged Interconnect Network).

Table 1: Impact of latency in CIN network measured with ByteBlower

| | No added latency | 1 ms latency |
| --- | --- | --- |
| min latency (ms) | 5.059 | 8.036 |
| avg latency (ms) | 9.482 | 12.731 |
| max latency (ms) | 18.551 | 19.635 |
| jitter (ms) | 1.733 | 1.663 |

Downstream, introducing an extra millisecond of latency with the impairment node resulted in one extra millisecond of latency measured with ByteBlower. This is as expected, and we observed it at different traffic rates and packet sizes.

It is upstream that we noted unexpected results: when adding 1 millisecond of latency upstream, roughly three times as much extra latency was measured. In Table 1, both the minimum and the average latency rise by about 3 ms.
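The upstream figures in Table 1 can be checked with a few lines of arithmetic. The sketch below only subtracts the two measurement columns; the variable names are ours.

```python
# Upstream latency from Table 1, in milliseconds.
baseline = {"min": 5.059, "avg": 9.482, "max": 18.551}
with_delay = {"min": 8.036, "avg": 12.731, "max": 19.635}
added_ms = 1.0  # latency introduced by the impairment node

# Extra latency per metric, rounded to the precision of the table.
extra = {key: round(with_delay[key] - baseline[key], 3) for key in baseline}

for key, value in extra.items():
    print(f"{key}: +{value:.3f} ms ({value / added_ms:.1f}x the added latency)")
```

The minimum rises by 2.977 ms and the average by 3.249 ms, both close to three times the added millisecond. The maximum rises by only 1.084 ms.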

Extra latency explained

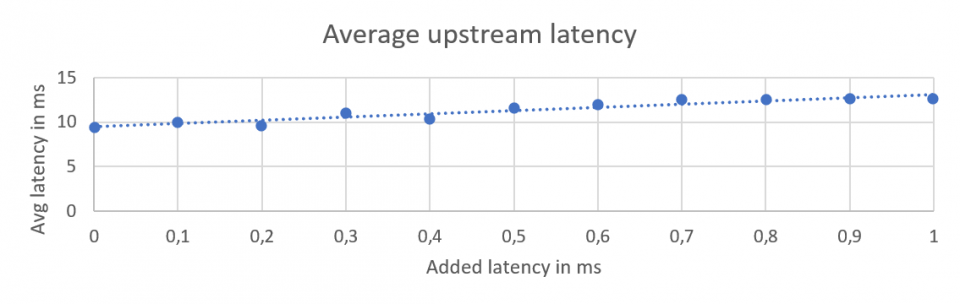

To investigate this further, we gradually increased the added latency in steps of 100 µs, starting from zero and ramping up to 1 millisecond, while sending 50 Mbps of upstream traffic with a frame size of 66 bytes. The graph in this section shows the results.

The extra latency observed has a simple explanation. To send a packet upstream, a bandwidth request first needs to be sent, and that request is delayed by the added 1 millisecond. The MAP message containing the grant is delayed as well, and finally the upstream packet itself is delayed. The added latency is therefore incurred three times, compounding the total.
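The explanation above can be turned into a simple model. The sketch below assumes that the request, the MAP message and the data packet each cross the impaired link exactly once and that nothing else changes; it ignores scheduler timing, so it is an approximation rather than a description of how a CCAP Core behaves. The function and constant names are hypothetical.

```python
# Number of times the added one-way delay is incurred per delivered packet.
UPSTREAM_CROSSINGS = 3    # bandwidth request, MAP with the grant, data packet
DOWNSTREAM_CROSSINGS = 1  # data packet only


def expected_extra_latency_us(added_one_way_us: int, crossings: int) -> int:
    """Extra end-to-end latency if each crossing adds the same one-way delay."""
    if added_one_way_us < 0 or crossings < 0:
        raise ValueError("delay and crossings must be non-negative")
    return added_one_way_us * crossings


# Same sweep as in the test: 0 to 1000 µs in steps of 100 µs.
for added_us in range(0, 1001, 100):
    up = expected_extra_latency_us(added_us, UPSTREAM_CROSSINGS)
    down = expected_extra_latency_us(added_us, DOWNSTREAM_CROSSINGS)
    print(f"added {added_us:4d} µs -> upstream +{up:4d} µs, downstream +{down:4d} µs")
```

Under these assumptions, 1 millisecond of added latency yields 3 milliseconds of extra upstream latency and 1 millisecond downstream, in line with the measurements.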

Our results highlight the fact that, with the deployment of remote PHY devices, it becomes crucial to reduce the latency in the network wherever possible.

Keep an eye on our blog post series to read more on this topic!

Need help testing? Get in touch to hear how Excentis can help.